Transferring data to and from Salesforce is not very difficult. The Salesforce Data Import Wizard and Dataloader.io have made data migration a largely point-and-click process. However, for repetitive, complex, or large-scale data transfers, these tools become cumbersome.

Talend offers a broad portfolio of data integration and migration products that can be used to move data to and from Salesforce. Its free data migration tool, Talend Open Studio, is popular among our Salesforce developers.

What is Talend?

Talend is an open source data integration platform. It provides software and services for data integration, data management, enterprise application integration, data quality, cloud storage, and Big Data. With Talend, data becomes more accessible, its quality improves, and it can be moved quickly to target systems.

For installation instructions and an introduction to Talend, see the link below.

Introduction To Talend Integration Tool

Steps to Export Data from Salesforce in Talend

Step 1: Open Talend and Create a Job

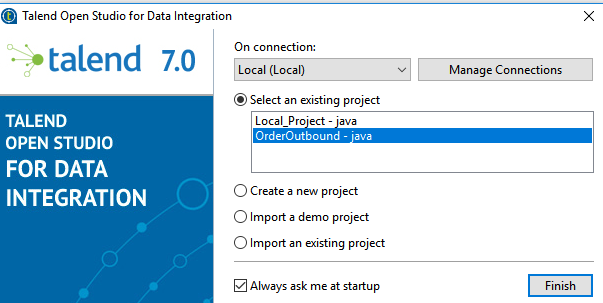

The first step is to open Talend and create a new project. You can create a local project with the default credentials, or access a cloud-hosted project with the appropriate login and password.

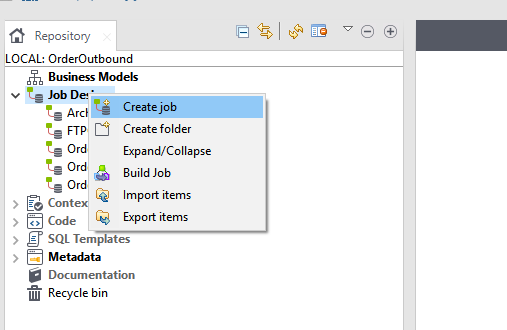

Once the project is open, create a new Job. In the left-hand panel labeled Repository, find the entry named Job Designs, right-click it, and create a new Job.

Step 2: Establishing Connections for Export

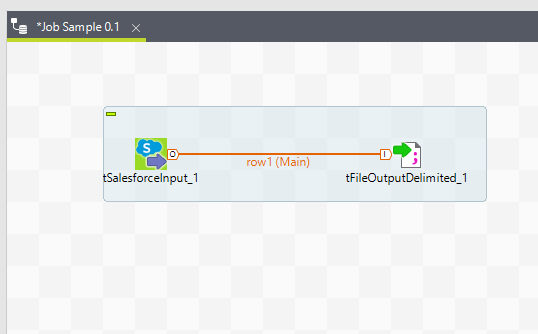

1. Once you have created your Job, drag and drop the components you need from the Palette onto the Job Designer panel. Start with the tSalesforceInput component, which extracts the data from Salesforce.

2. Connect the tSalesforceInput component to a tFileOutputDelimited component, which writes data to a delimited file, using a Row > Main connection.

Step 3: Configuring the Components

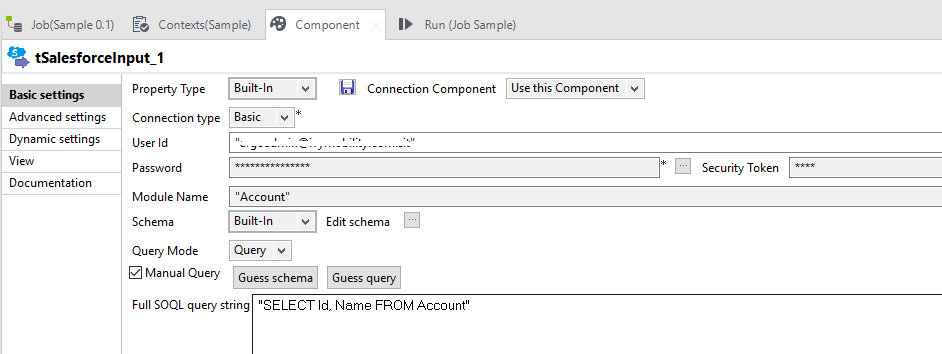

1. Double-click the tSalesforceInput component to open its Basic settings view.

2. In the User Id, Password and Security Key fields, enter the user authentication information required to access Salesforce.

3. Click the […] button next to the Module Name field and, in the pop-up dialog box, select the object you want to access. In this example, it is Account.

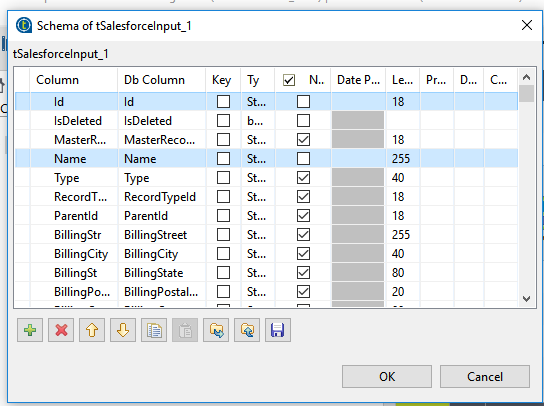

4. Click the […] button next to Edit schema to open the schema dialog box.

5. Remove all columns except Id and Name.

Note that to retrieve a column from a linked object, it is necessary to define the name of the column in a particular manner in the schema editor.

Click OK to save the changes and close the schema dialog box.

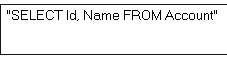

6. Select the Manual Query check box and, in the Full SOQL query string field that appears, enter the SOQL statement that selects the data to retrieve. In this example, the statement is as follows:

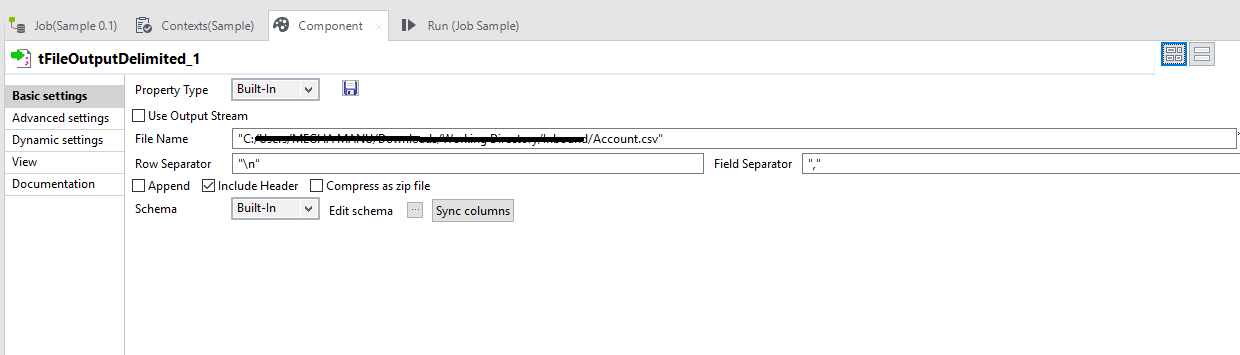

7. Double-click the tFileOutputDelimited component to open its Basic settings view.

8. In the Property Type field, set the type to Built-in and fill in the fields that follow manually.

9. Click the […] button next to the File Name field and browse to the output file you want to write to, Account.csv in this example (a different extension such as .txt also works, since the content is delimited text either way).

10. In the Row Separator and Field Separator fields, enter "\n" and "," respectively.

11. Select the Include Header check box if you want to output the column headers as well.

12. Click Edit schema to open the schema dialog box and verify that the retrieved schema matches the input schema. If it does not, click Sync Columns to retrieve the schema from the preceding component.

13. Press Ctrl+S to save your Job.

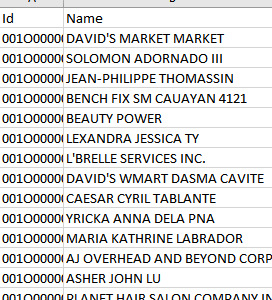

14. Press F6 or click Run on the Run tab to execute the Job.

The two specified columns, Id and Name, are written to the defined output file.
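As a rough illustration of what the configured Job produces, here is a minimal Python sketch. It is not Talend code and not what Talend generates. It only mimics the settings described above: a query over the Id and Name columns of Account, "\n" as the row separator, "," as the field separator, and an optional header row. The exact SOQL statement used in this example is shown only in the screenshot, so the query built here is an assumed equivalent; the function names and the sample records are hypothetical.

```python
import csv
import io

# Columns kept in the schema in this example.
COLUMNS = ["Id", "Name"]


def build_soql(columns, sobject):
    """Build a simple SOQL SELECT for the given columns (assumed query shape)."""
    return "SELECT {} FROM {}".format(", ".join(columns), sobject)


def write_delimited(rows, columns, field_sep=",", row_sep="\n", include_header=True):
    """Render rows as delimited text, mirroring the separator and header settings."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter=field_sep, lineterminator=row_sep)
    if include_header:
        writer.writerow(columns)
    for row in rows:
        # Keep only the schema columns, in schema order.
        writer.writerow([row[col] for col in columns])
    return buffer.getvalue()


# Hypothetical records standing in for what Salesforce would return.
sample_rows = [
    {"Id": "001A", "Name": "Acme"},
    {"Id": "001B", "Name": "Globex"},
]

print(build_soql(COLUMNS, "Account"))
print(write_delimited(sample_rows, COLUMNS), end="")
```

With the header enabled, the first line of the output holds the column names and each following line holds one record, which is the layout you should expect to see when you open Account.csv.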

Note: There is no built-in limit to the number of records you can output with tFileOutputDelimited. Once you reach very large record counts (1B+), you need to consider the resources available on the server; your particular Job and hardware configuration may impose a practical limit on the number of records you can process (disk space, memory, and so on).
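When the exported file is large, downstream checks should avoid loading it into memory all at once. The following Python sketch, which is independent of Talend, counts the records in a delimited file by streaming it row by row. The function name is hypothetical, and the file is assumed to be UTF-8 text with the field separator and header settings used in this example.

```python
import csv


def count_records(path, has_header=True, field_sep=","):
    """Count data rows by streaming the file, so memory use stays flat."""
    with open(path, newline="", encoding="utf-8") as handle:
        reader = csv.reader(handle, delimiter=field_sep)
        if has_header:
            next(reader, None)  # skip the header row if there is one
        return sum(1 for _ in reader)


# Example call (assumes Account.csv is in the current directory):
# count_records("Account.csv")
```

Because the reader yields one row at a time, the memory footprint does not grow with the file size; only the running time does.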